I like the way you move

In computer graphics, photorealism drives the field toward a goal that everyone can relate to and evaluate. Other areas of computing, like user interface design, have goals that are harder to define. I’ll use computer animation tools as an example.

1. Photorealism

Here’s a nice video about the making of the movie Jurassic Park:

The amount of work and invention required to produce convincing-looking dinosaurs is staggering. And it was done in 1993!

I think computer graphics is fortunate to have a clearly defined benchmark. In the physical world, we all have an intimate experience of how light, materials and living creatures behave. Our brain is extremely good at spotting inconsistencies. The public wouldn’t settle for anything less than convincing-looking pictures. That sets a very high bar for visual effects teams, and drives formidable invention in the field.

Computing areas where the bar isn’t precisely defined, or which have no natural equivalent in the physical world, are much harder to push forward. This includes the very way we use computers to do graphics (the user interface and the graphics programming languages), as well as the everyday software we use to share and communicate.

2. Defining other goals

What would be the equivalent of photorealism for user interface design? We could think of “body realism” or “cognitive realism”: the idea that interfaces should match our universal motor and cognitive abilities, and make the best use of what we naturally do well, such as hand-eye coordination or detecting temporal patterns.

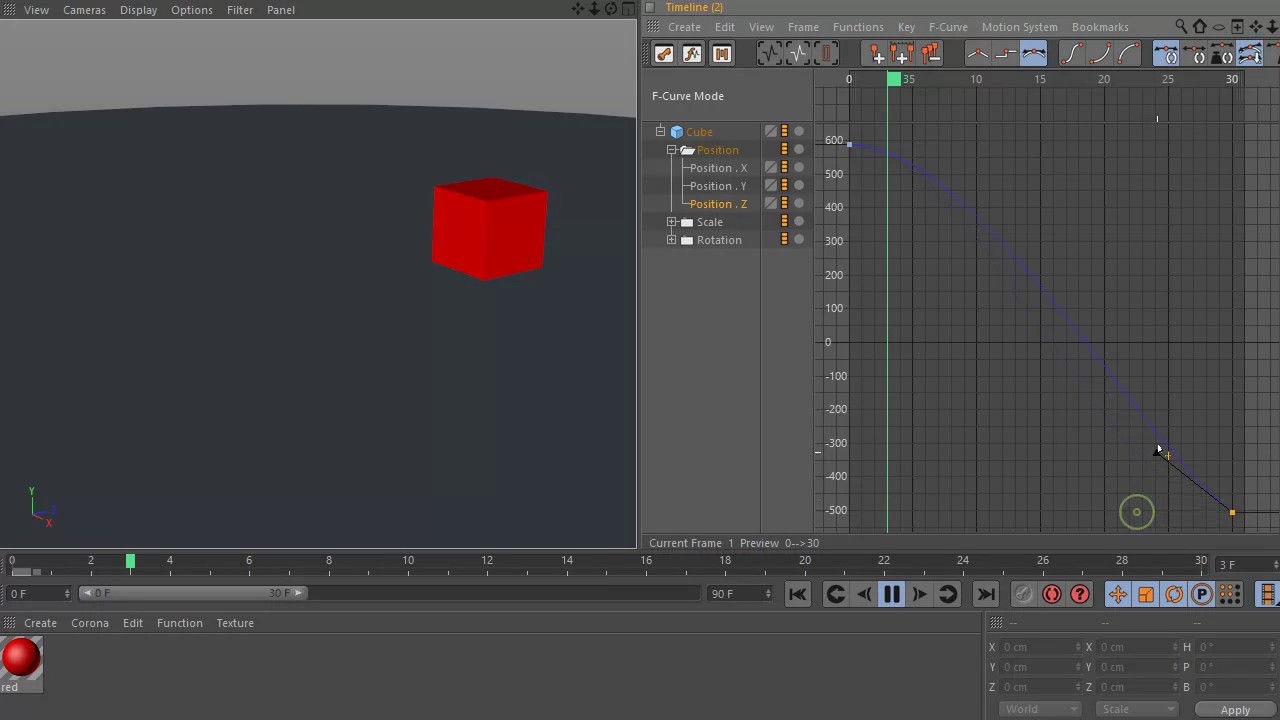

For example, animators are mental dancers who have an intimate sense of timing. They can capture the nuances of body language and common physical behavior. But in practice, they have to lay out their intuition over a spatial representation of time, and learn abstract animation curves to express the inner dancing that naturally comes to them.

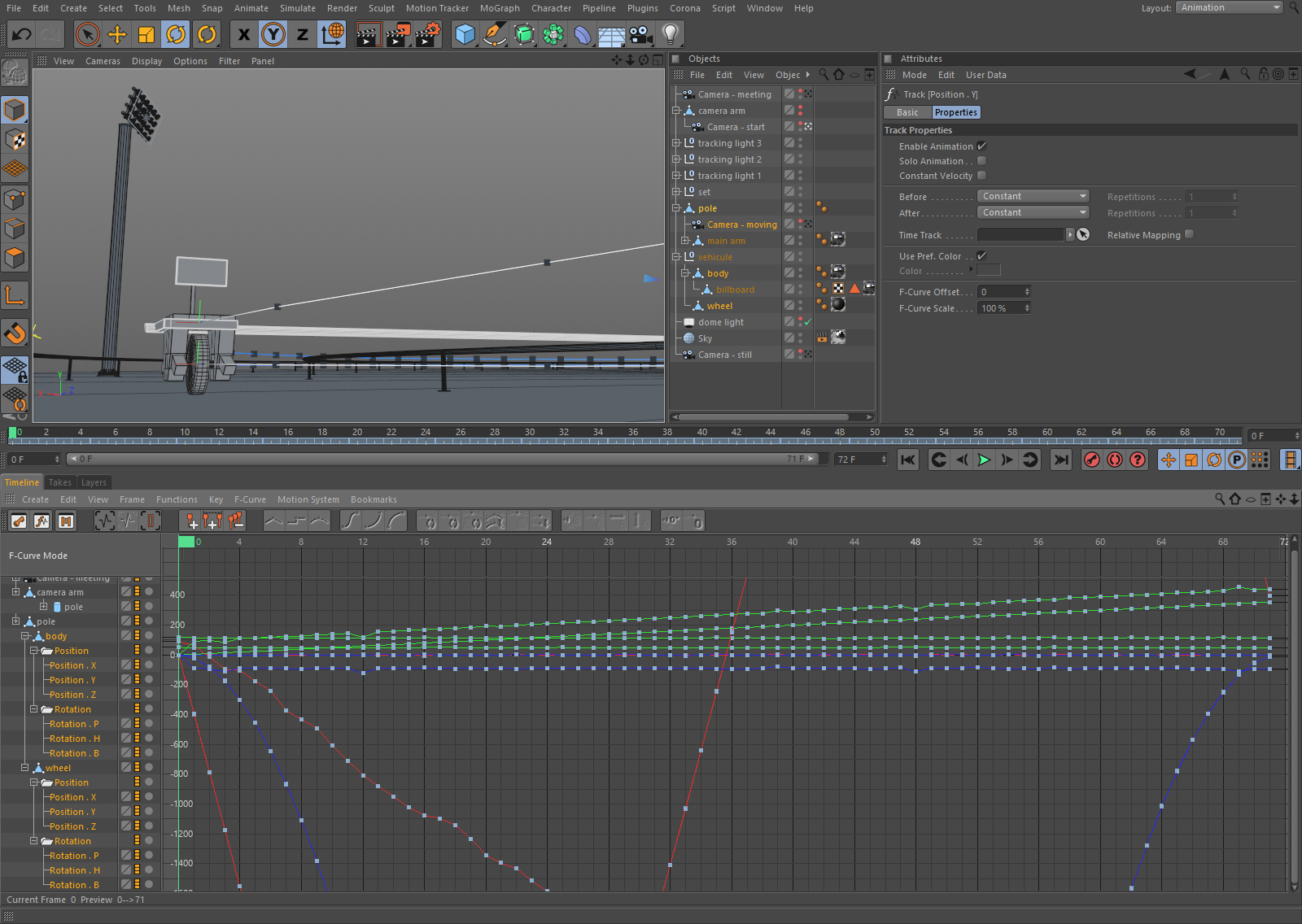

When I see the tool above, I am impressed by its elegance and power. However, when working on actual shipping projects, animation curves are more likely to look like this:

The animator has to handle the abstraction of representing time as space, and cope with the mental complexity that this representation quickly grows into. Learning to use professional animation tools well enough to ship anything interesting is a huge effort, no matter how much the big names in the animation industry downplay them as “just” tools.

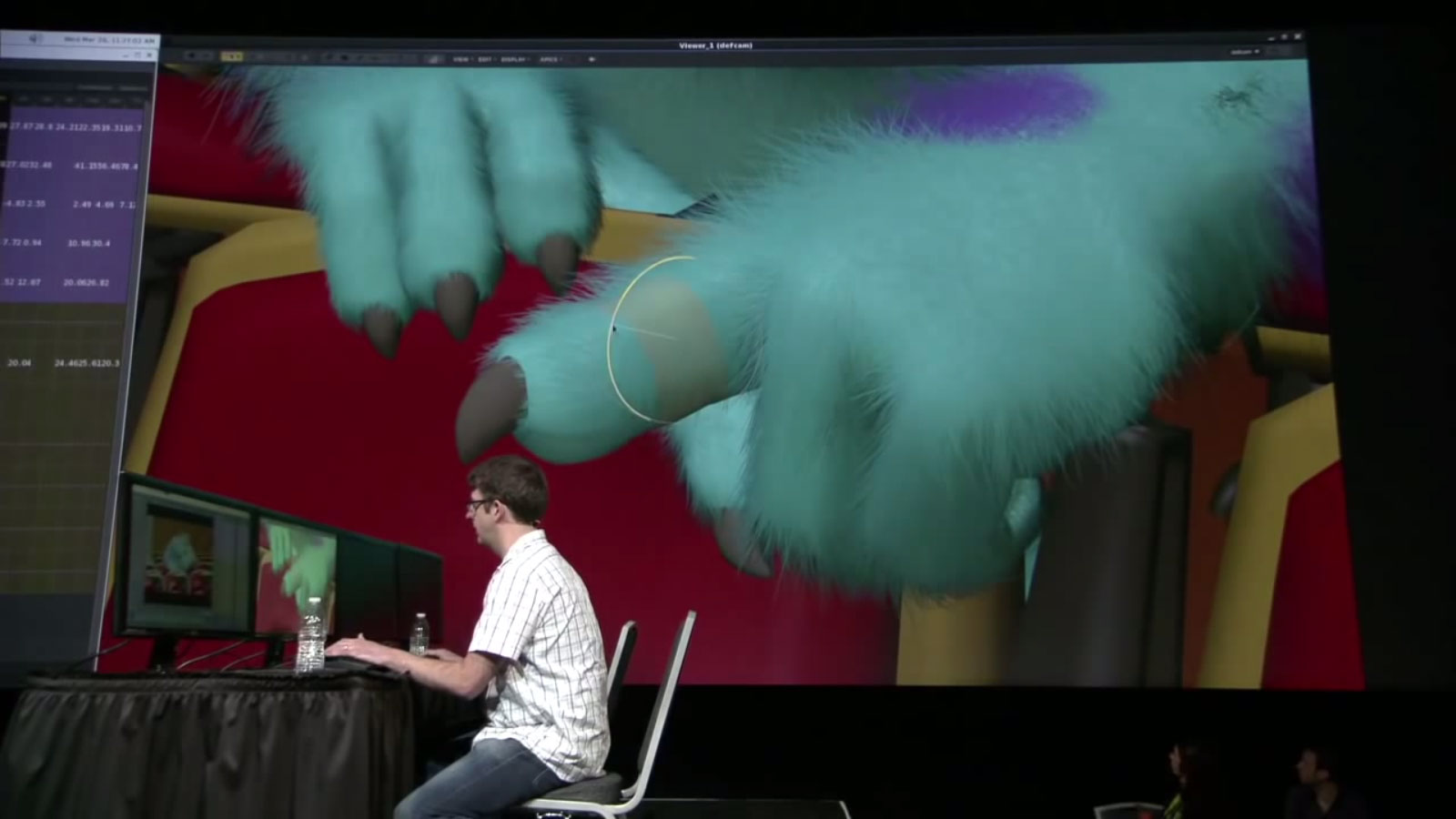

One of the magicians behind Jurassic Park, Phil Tippett, who was in charge of the animatronics and stop-motion animation, had this to say about digital computer animation:

We [in the stop-motion animation team] are used to actually walking up to the puppet, making each of these moves by hand. I’m not used to sitting down at a keyboard and having to hit buttons. It’s kind of like animating with boxing gloves on.

Tippett laments the indirection of animating a digital puppet compared to animating a physical one. But this points to an opposite problem as well: animators have to animate their puppets, whether digital or physical, down to the rotation angle of the finger joints. In real life, when we reach to grasp a glass of water, we don’t do so with explicit knowledge of our fingers’ rotation angles. Instead, these details are implicitly integrated by our motor abilities, and we act at a higher level of abstraction. It is that level that’s meaningful to us and to the audience of animated pictures.

So it looks like the animation process happens either one layer of abstraction above or one layer below the layer that’s actually meaningful to the animator, without ever being at the sweet spot. I wonder whether this constant movement up and down the ladder of abstraction is a fundamental limit, i.e. it is what makes an animator an animator, or an arbitrary limit, i.e. it is simply how current animation systems work.

Does it make sense to think of an animation system where we direct characters by instructing meaning, instead of tediously manipulating single components? If I want to invoke rhythms like “3, 2, 1, go!” or “↗↘↗↘↗↘”, how would I tell the computer what to do in terms of what I want to do?

References

- CineFix. Jurassic Park’s T-Rex Paddock Attack (YouTube), 2017.

- Eric Kron. Tauntauns to Tyrannosaurus: Evolution of Effects Animation, 2010.

- Ronald M. Baecker. A Conversational Extensible System for the Animation of Shaded Images (PDF), 1976. This paper is about SHAZAM, an animation system made at Xerox PARC. I stole two formulations from the paper: “It’s essential that a computer animator develop an ability to sense which aspects of a system’s limitations are arbitrary and which are fundamental” (p. 6), and “It was impossible to explain to the animator what I was doing in terms of what he was doing” (p. 6).

- Bret Victor. Up and Down the Ladder of Abstraction, 2011.